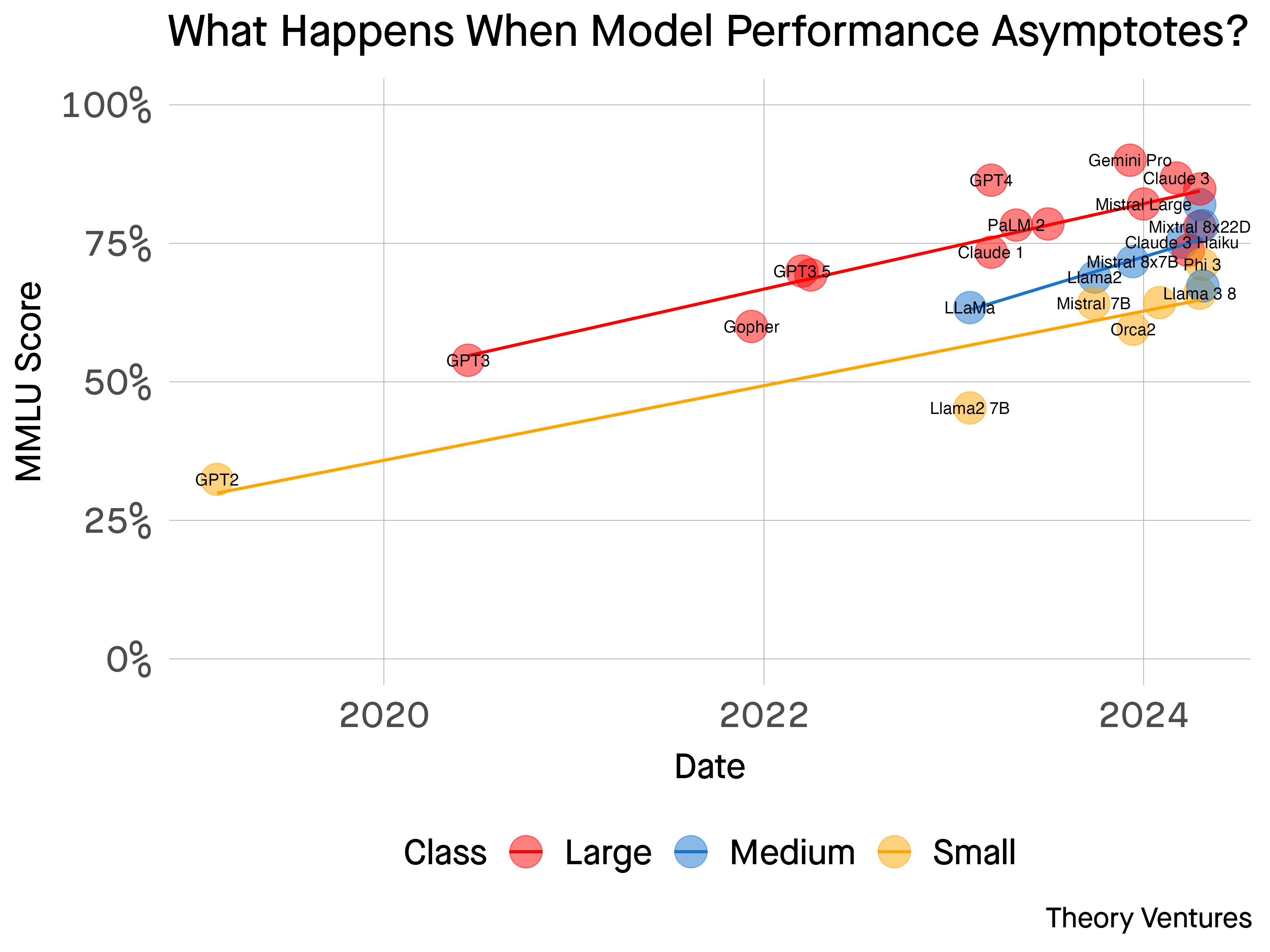

Snowflake released Arctic, their open mixture-of-experts model with 17B active parameters. The LLM performance chart is replete with new options in just a few weeks. Overall knowledge performance is asymptoting, as expected. It's hard to discern the latest dots.

One thing stands out from the announcement: the positioning of the model.

“The Best LLM for Enterprise AI”

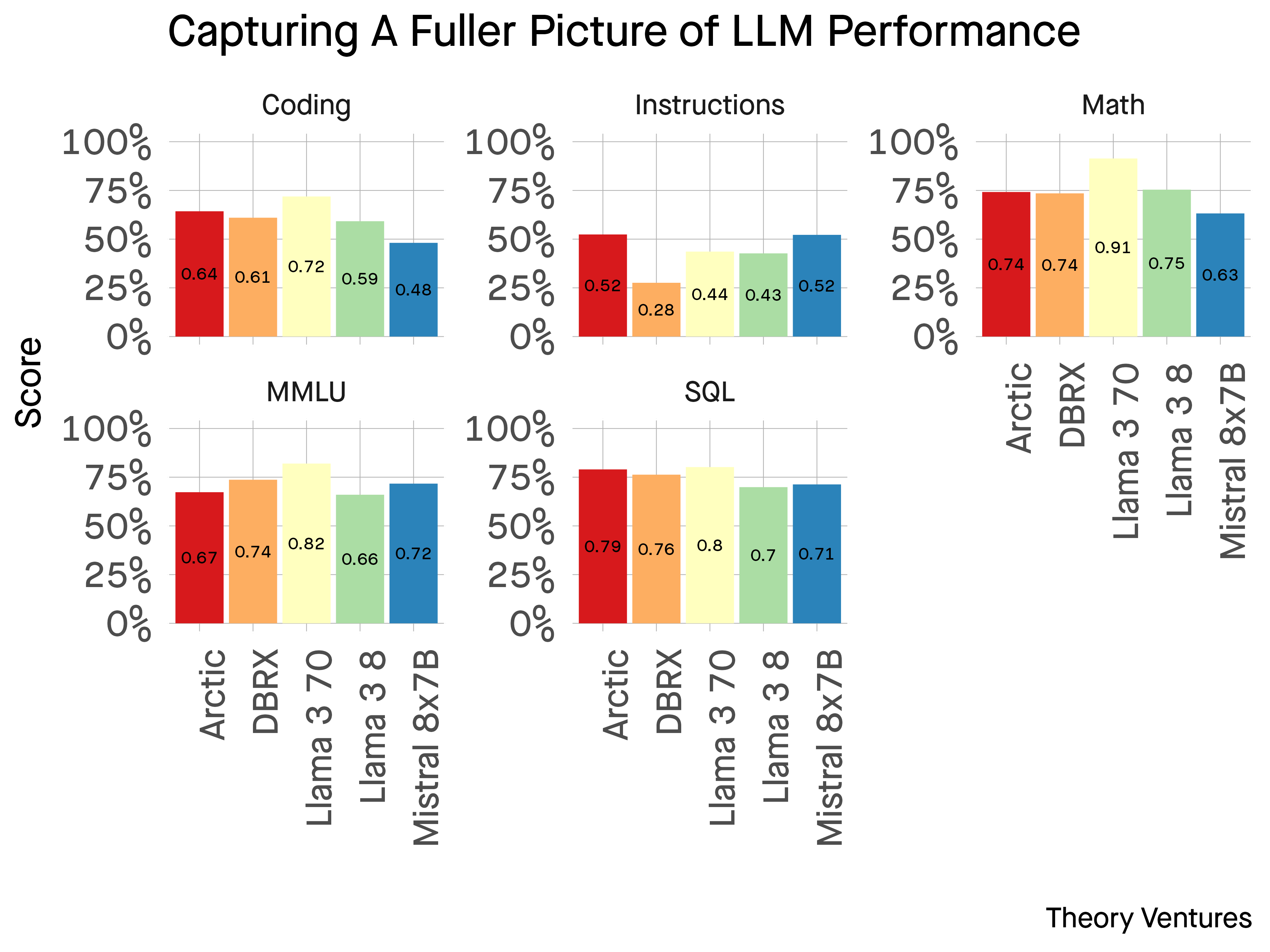

Snowflake focuses on the model's enterprise performance : SQL generation, code completion, & logic. Others will likely echo this push as models begin to specialize.

When Databricks/Mosaic launched DBRX, their announcement focused on openness, training efficiency, & inference efficiency.

This is a shift from the general-purpose marketing of LLMs like GPT-4, Llama 3, & Orca. Arctic & DBRX are B2B models.

With MMLU general-knowledge (high-school equivalency) scores now asymptoting, buyers of models will begin to care about different attributes. For a data-focused customer base, SQL generation, Python code completion, & instruction following matter more than encyclopedic knowledge of Napoleon's doomed march to Moscow.

The push to smaller models is another form of differentiation. Smaller models are much cheaper to operate : two orders of magnitude or more.
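A rough way to see where a gap of that size can come from: a common rule of thumb puts the compute needed to generate one token from a decoder-only transformer at about 2 × N floating-point operations, where N is the number of parameters active for that token. The sketch below applies that approximation. The 1T-parameter "large" model is a hypothetical size chosen for illustration, not a published figure, and real serving cost also depends on memory footprint, batching, and hardware, which this ignores.

```python
# Rule-of-thumb estimate: generating one token costs roughly 2 * N FLOPs,
# where N is the number of parameters active for that token.
# This ignores memory bandwidth, batching, and hardware pricing.

def flops_per_token(active_params: float) -> float:
    """Approximate forward-pass FLOPs to generate one token."""
    return 2.0 * active_params


def relative_cost(small_params: float, large_params: float) -> float:
    """How many times more compute per token the large model needs."""
    return flops_per_token(large_params) / flops_per_token(small_params)


if __name__ == "__main__":
    small = 8e9   # an 8B-parameter model, e.g. Llama 3 8B
    large = 1e12  # hypothetical 1T-parameter model (illustrative assumption)
    print(f"~{relative_cost(small, large):.0f}x more compute per token")
```

Under these assumptions the ratio is about 125x, just over two orders of magnitude. For a mixture-of-experts model like Arctic, N is the active parameter count rather than the total.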

Meta's most recent small model, the 8B-parameter Llama 3, demonstrates that small "over-trained" models can perform on par with their larger siblings.

This is all great news for founders & users : more models, more choice, better performance across domains, and lower costs for training, tuning, & inference. If recent weeks are any indication, we should expect further flurries of advances & specialization.